Additionally, intelligent bin-packing of Pods onto available nodes, drives optimal cluster utilization and cost reduction.

Pod-driven autoscaling that out-of-the-box, takes into consideration Pod requirements, and rapidly spins up (or down), the relevant nodes so your workloads always will have sufficient resources.Some of the key benefits of Ocean include: It continuously monitors and optimizes infrastructure to meet containers’ needs, ensuring the best pricing, lifecycle, performance, and availability. Ocean by Spot is an infrastructure automation and optimization solution for containers in the cloud (working with EKS, GKE, AKS, ECS and other orchestration options). The serverless experience for Kubernetes infrastructure Let’s take a look at a turn-key alternative and then how it supports Spark workloads. auto scaling groups) which might be a heavy burden for some teams. But this requires significant configuration and ongoing management of Cluster-Autoscaler and all associated components (e.g.

Of course one can take a do-it-yourself approach using the open-source Cluster-Autoscaler. With most workloads being quite dynamic, scaling instances up and down will require some form of Kubernetes infrastructure autoscaling. For Kubernetes to manage all the different workloads, Spark or otherwise, it needs some underlying compute infrastructure. While this sounds great, we’re still missing one key ingredient.

#KUBERNETES INSTALL APACHE SPARK ON KUBERNETES HOW TO#

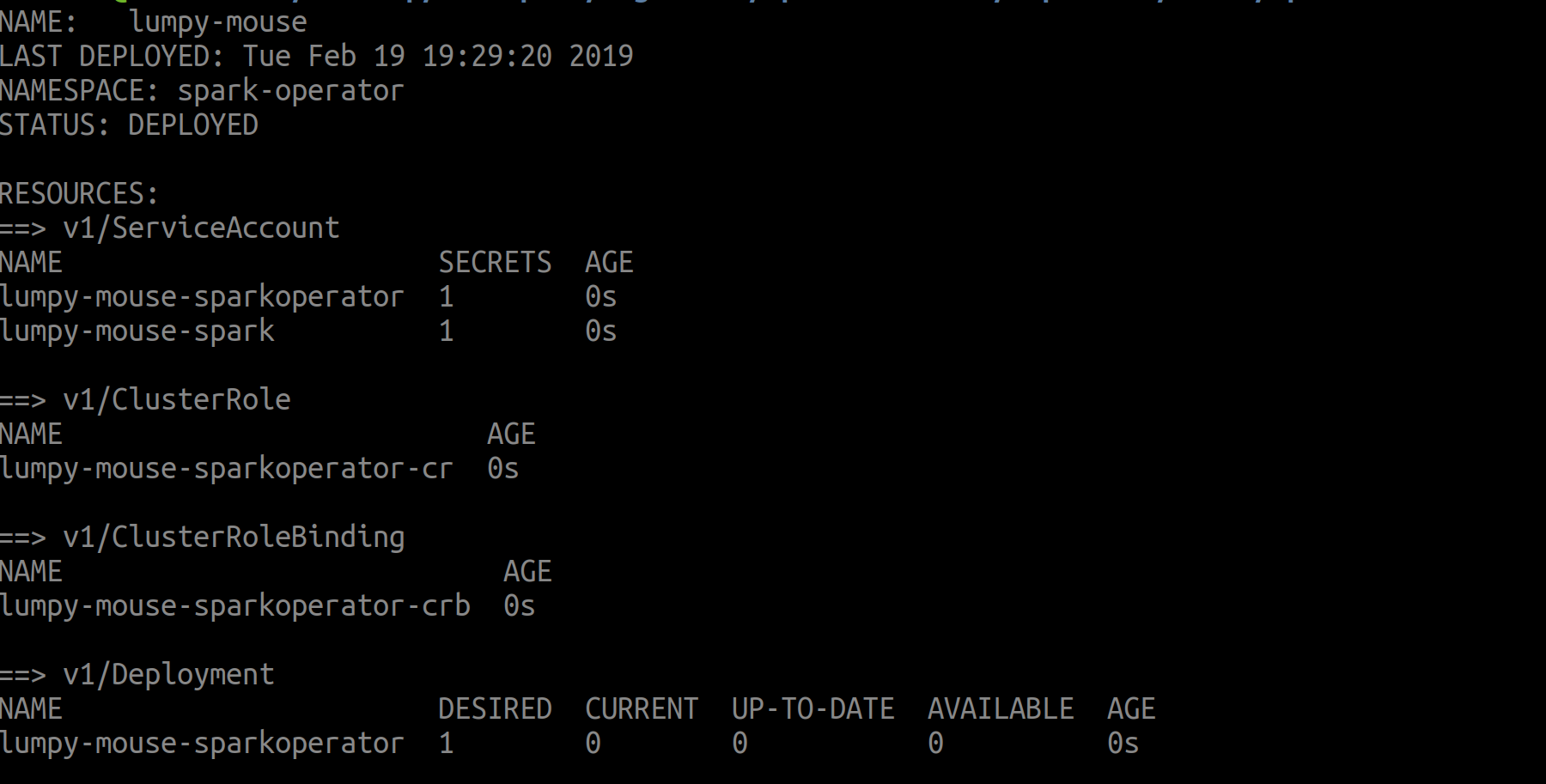

In this post, we will be focusing on how to run Apache Spark on Kubernetes without having to handle the nitty-gritty details of infrastructure management. These resources can either be a group of VMs that are configured and installed to act as a Spark cluster or as is becoming increasingly common, use Kubernetes pods that will act as the underlying infrastructure for the Spark cluster. While Spark manages the scheduling and processing needed for big data workloads and applications, it requires resources like vCPUs and memory to run on. As an open-source, distributed, general-purpose cluster-computing framework, Apache Spark is popular for machine learning, data processing, ETL, and data streaming.
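To make the bin-packing idea mentioned in this post more concrete, here is a minimal sketch in Python using the classic first-fit-decreasing heuristic. It is only an illustration and not Ocean's actual algorithm, which this post does not describe. The `Pod` and `Node` classes, the `pack_pods` function, and the resource figures are all hypothetical names and values chosen for the example.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Pod:
    """Hypothetical pod with CPU (millicores) and memory (MiB) requests."""
    name: str
    cpu: int
    mem: int


@dataclass
class Node:
    """Hypothetical node that tracks its remaining free capacity."""
    free_cpu: int
    free_mem: int
    pods: list[str] = field(default_factory=list)

    def try_place(self, pod: Pod) -> bool:
        """Place the pod on this node if both CPU and memory fit."""
        if pod.cpu <= self.free_cpu and pod.mem <= self.free_mem:
            self.free_cpu -= pod.cpu
            self.free_mem -= pod.mem
            self.pods.append(pod.name)
            return True
        return False


def pack_pods(pods: list[Pod], node_cpu: int, node_mem: int) -> list[Node]:
    """First-fit decreasing: place the largest pods first, reusing existing
    nodes where possible and adding a new node only when nothing fits."""
    nodes: list[Node] = []
    for pod in sorted(pods, key=lambda p: (p.cpu, p.mem), reverse=True):
        if pod.cpu > node_cpu or pod.mem > node_mem:
            raise ValueError(f"{pod.name} does not fit on any node of this size")
        # any() stops at the first node that accepts the pod.
        if not any(node.try_place(pod) for node in nodes):
            node = Node(node_cpu, node_mem)
            node.try_place(pod)
            nodes.append(node)
    return nodes


if __name__ == "__main__":
    # Assumed example: one Spark driver and three executors.
    spark_pods = [
        Pod("driver", 1000, 2048),
        Pod("exec-1", 2000, 4096),
        Pod("exec-2", 2000, 4096),
        Pod("exec-3", 2000, 4096),
    ]
    for i, node in enumerate(pack_pods(spark_pods, node_cpu=4000, node_mem=8192)):
        print(f"node-{i}: {node.pods}")
```

A real autoscaler also has to account for things this sketch ignores, such as multiple instance types, pricing, affinity rules, and pods that are already running, so treat this as an intuition aid rather than a model of any specific product.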
